What is a bounding box in computer vision?

In geospatial data, a bounding box (usually abbreviated as bbox) is an area defined by two longitudes and two latitudes, where each latitude is a decimal number between -90.0 and 90.0 and each longitude is a decimal number between -180.0 and 180.0.

They usually follow this standard format:

bbox = left,bottom,right,top

bbox = min longitude, min latitude, max longitude, max latitude
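As a minimal sketch of the format above, the following parses a `left,bottom,right,top` string and checks the stated latitude and longitude ranges. The function name `parse_bbox` is an assumption made for illustration and does not come from any particular library.

```python
from typing import Tuple


def parse_bbox(text: str) -> Tuple[float, float, float, float]:
    """Parse 'left,bottom,right,top' into four floats and check the ranges."""
    parts = [float(p) for p in text.split(",")]
    if len(parts) != 4:
        raise ValueError("expected four comma-separated numbers")
    min_lon, min_lat, max_lon, max_lat = parts
    if not (-180.0 <= min_lon <= 180.0 and -180.0 <= max_lon <= 180.0):
        raise ValueError("longitude must be between -180.0 and 180.0")
    if not (-90.0 <= min_lat <= 90.0 and -90.0 <= max_lat <= 90.0):
        raise ValueError("latitude must be between -90.0 and 90.0")
    if min_lat > max_lat:
        raise ValueError("bottom must not be above top")
    return min_lon, min_lat, max_lon, max_lat


# Example: a box with left=-0.5, bottom=51.3, right=0.3, top=51.7
box = parse_bbox("-0.5,51.3,0.3,51.7")
```

The sketch deliberately does not compare the two longitudes, since a box that crosses the 180° meridian can legitimately have a left value larger than its right value.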

In computer vision, a bounding box is essentially a rectangle that surrounds an object and specifies its location. It is usually paired with a class (for example, car or person) and a confidence (the likelihood of the object being in that location).

A bounding box is primarily utilized for the task of object detection. Here the goal is to identify the position and type of several objects in an image.

You could say that the bounding box is an abstract rectangle that serves as a reference point for object detection and generates a collision box for that object. Data annotators draw these rectangles over images, identifying the X and Y coordinates of the object of interest in every image. This helps machine learning algorithms find what they are searching for and evaluate collision paths, and it can even save computational power.
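The collision-box idea can be illustrated with an axis-aligned overlap test. This is only a sketch: it assumes each box is given as `(x1, y1, x2, y2)` with the top-left corner first, and the names `Box` and `boxes_overlap` are hypothetical.

```python
from typing import Tuple

# Assumed layout: (x1, y1, x2, y2), top-left corner then bottom-right corner.
Box = Tuple[float, float, float, float]


def boxes_overlap(a: Box, b: Box) -> bool:
    """Return True if two axis-aligned boxes share any area."""
    ax1, ay1, ax2, ay2 = a
    bx1, by1, bx2, by2 = b
    # The boxes overlap only if their extents overlap on both the x and y axes.
    return ax1 < bx2 and bx1 < ax2 and ay1 < by2 and by1 < ay2
```

Because the comparisons are strict, boxes that only touch along an edge are treated as not overlapping. Whether that is the right choice depends on the application.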

Bounding boxes are among the most widely used image annotation techniques in deep learning. Compared with other image annotation approaches, the bounding box technique can save resources and speed up annotation substantially.

In fact, the bounding box technique is one of the least costly and least time-consuming annotation approaches that you could use.

What are the conventions used in specifying a bounding box?

Two main conventions tend to be followed when specifying a bounding box:

- Specifying the box with respect to the coordinates of its top-left and bottom-right points.

- Specifying the box with respect to its center, width, and height.

Let’s take an example of a car to understand these conventions.

Following the first convention, the bounding box would be specified according to the coordinates of the top left point and the bottom right point.

Following the second convention, the bounding box would be specified according to its center, width, and height.

What parameters are used to define a bounding box?

The parameters used depend on the convention chosen to specify the bounding box. Here are the main parameters:

- Class: This defines what the object inside the bounding box is, for example a motorbike or a house.

- (x1, y1): These are the x and y coordinates of the top-left corner of the rectangle.

- (x2, y2): These are the x and y coordinates of the bottom-right corner of the rectangle.

- (xc, yc): These are the x and y coordinates of the center of the bounding box.

- Width: This defines the width of the bounding box.

- Height: This defines the height of the bounding box.

- Confidence: This shows how likely it is that the object is actually present in that box. For example, a confidence of 0.9 means there is a 90% likelihood that the object actually exists in that box.
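One way to group these parameters in code is a small record type. The sketch below uses the corner convention and assumes image coordinates in which y grows downward. The class name `Detection` and its field names are hypothetical, not taken from a specific framework.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """One detected object in corner format (hypothetical container)."""

    label: str         # object class, e.g. "motorbike" ("class" is a Python keyword)
    x1: float          # x coordinate of the top-left corner
    y1: float          # y coordinate of the top-left corner
    x2: float          # x coordinate of the bottom-right corner
    y2: float          # y coordinate of the bottom-right corner
    confidence: float  # likelihood between 0.0 and 1.0

    def __post_init__(self) -> None:
        # Reject boxes with no area and confidences outside the valid range.
        if self.x2 <= self.x1 or self.y2 <= self.y1:
            raise ValueError("bottom-right corner must be below and right of top-left")
        if not 0.0 <= self.confidence <= 1.0:
            raise ValueError("confidence must be between 0.0 and 1.0")


car = Detection("car", x1=50, y1=30, x2=250, y2=130, confidence=0.9)
```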

How do you convert between the conventions?

It is possible to convert between the different forms of representing the bounding box, depending on your use case.

- xc = (x1 + x2) / 2

- yc = (y1 + y2) / 2

- width = x2 - x1

- height = y2 - y1
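The formulas above translate directly into code. The reverse direction (center format back to corners) is not listed above, but follows from solving the same equations. The function names here are assumptions chosen for illustration.

```python
def corners_to_center(x1, y1, x2, y2):
    """Convert (x1, y1, x2, y2) to (xc, yc, width, height)."""
    xc = (x1 + x2) / 2
    yc = (y1 + y2) / 2
    width = x2 - x1
    height = y2 - y1
    return xc, yc, width, height


def center_to_corners(xc, yc, width, height):
    """Convert (xc, yc, width, height) back to (x1, y1, x2, y2)."""
    x1 = xc - width / 2
    y1 = yc - height / 2
    x2 = xc + width / 2
    y2 = yc + height / 2
    return x1, y1, x2, y2
```

For example, a box with corners (50, 30) and (250, 130) has its center at (150, 80), a width of 200, and a height of 100. Converting that result back yields the original corners.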

How is the bounding box used for object detection?

The bounding box tells a computer what an object is and where it is, so that the object can be detected in an image. For autonomous vehicles, for example, an annotator would label the other vehicles in an image and draw a bounding box around each of them. This helps train an algorithm to identify various types of vehicles.

Annotating items such as vehicles, traffic signals, and pedestrians in this way is what enables self-driving vehicles to drive through busy streets. Perception models depend heavily on bounding boxes to make this happen.

However, a single bounding box doesn’t guarantee flawless prediction quality. For better target tracking, you need a large and varied set of annotated bounding boxes, along with data augmentation techniques.
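Data augmentation has to keep the boxes in sync with the transformed image. As one hedged illustration, the sketch below mirrors a corner-format box for a horizontal flip. It assumes continuous pixel coordinates running from 0 to `image_width`, and `hflip_box` is a hypothetical helper name. Libraries that work with integer pixel indices may use `image_width - 1` instead.

```python
def hflip_box(box, image_width):
    """Mirror an (x1, y1, x2, y2) box for a horizontally flipped image."""
    x1, y1, x2, y2 = box
    # Flipping maps x to image_width - x, so the old right edge
    # becomes the new left edge and vice versa; y values are unchanged.
    return image_width - x2, y1, image_width - x1, y2


flipped = hflip_box((50, 30, 250, 130), image_width=640)
```

Applying the flip twice returns the original box, which is a quick sanity check for this kind of transform.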

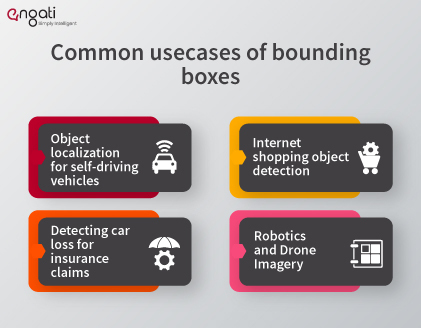

What are some common use cases of bounding boxes?

Object localization for self-driving vehicles

Bounding boxes are generally used to train autonomous vehicle vision models to identify different types of objects on the road, such as traffic signals, lane barriers, and pedestrians. All identifiable obstacles can be annotated with bounding boxes, so that the vehicle can recognize its environment, drive safely, and avoid collisions, even on congested streets.

Internet shopping object detection

Products sold online are also annotated with bounding boxes so that models can recognize what clothing or other accessories people are wearing. This technique can be used to annotate all forms of fashion accessories, enabling visual search machine learning models to identify them and provide additional information to end users.

Detecting car damage for insurance claims

Images annotated with bounding boxes make it possible to identify vehicles, such as cars and bikes, that have been damaged in an accident. ML models can use the bounding boxes to learn the severity and position of the damage and predict the cost of a claim, so that a client can get an estimate before filing one.

Robotics and Drone Imagery

Image annotation is widely used to mark items from the viewpoint of robots and drones. Autonomous machines such as robots and drones can classify many kinds of objects in their surroundings by making use of photographs annotated with this technique.